
AI Algorithms Can Now Generate Video from Text

AI being used to generate video from text

Artificial intelligence is growing month by month as it is applied to more and more areas. AI already powers a range of consumer electronics, from smartphones and computers to IoT devices. Now, with AI at the core, it is becoming possible to generate video from text.

Producing a steady stream of new videos that keep up with emerging trends is a demanding task for creators. Scientists have therefore started using artificial intelligence to generate video directly from text.

With this research, a user can type a simple phrase, and the AI analyzes the text and creates a video of the scene it describes.

AI can generate video from text

The researchers who made video generation from text possible with artificial intelligence are from Duke and Princeton. They explained that creating a video is directly related to predicting video: the system has to work out what should appear from one frame to the next.

In this research, the task of predicting which actions should be part of the video is handed to the artificial intelligence rather than to a human.

Videos capture a wider range of human emotions and actions than still pictures, and the AI has been trained to identify these from videos.

Teaching and training the AI

To train the AI to generate video from text, the scientists used video clips of a range of easily understood, well-defined activities.

The activities the AI was trained to identify included ‘jogging’, ‘riding a bike’, ‘kite surfing’, ‘playing football’ and ‘swimming’, among others.
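The article does not say how a typed phrase is related to these training categories; the published system learned that link from data. Purely as an illustration, the hypothetical sketch below matches a prompt to the closest category by word overlap. The `ACTIVITIES` list comes from the article, while `STOPWORDS`, `tokenize` and `closest_activity` are made-up names for this example, not part of the researchers' code.

```python
from __future__ import annotations

# Activity categories named in the article.
ACTIVITIES = ["jogging", "riding a bike", "kite surfing", "playing football", "swimming"]

# Hypothetical: common filler words ignored when comparing text.
STOPWORDS = {"a", "an", "the", "on", "in", "of", "is"}


def tokenize(text: str) -> set[str]:
    """Lower-case the text, drop stopwords and return the remaining words as a set."""
    return {word for word in text.lower().split() if word not in STOPWORDS}


def closest_activity(prompt: str) -> str | None:
    """Return the activity sharing the most words with the prompt, or None if none match."""
    prompt_words = tokenize(prompt)
    best, best_overlap = None, 0
    for activity in ACTIVITIES:
        overlap = len(prompt_words & tokenize(activity))
        if overlap > best_overlap:
            best, best_overlap = activity, overlap
    return best


print(closest_activity("A man riding a bike on the road"))
```

Word overlap is only a stand-in here; a real text-to-video model encodes the whole sentence with a learned network, so it can cope with wording that shares no words with a category name.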

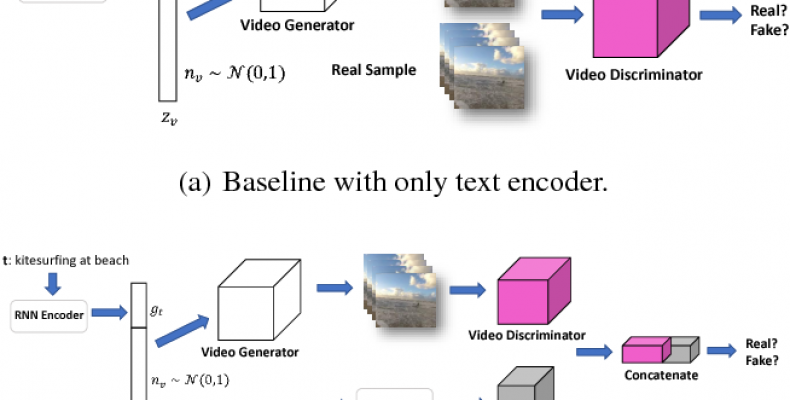

In later stages, the AI studied the clips and learned to identify varied human emotions from existing videos. The AI was able to identify millions of emotions after a few weeks of training. Once the researchers had built a dataset from this AI training exercise, they applied a two-step process that lets the AI stitch together a video based on the text.

In the first step, the AI creates a range of “gists” of the video based on the input text. A gist is an image that sets the background colour and the layout of the objects in the scene.
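To make the idea of a gist concrete, here is a toy sketch of what one might hold: a background colour plus a rough object layout on a small grid. The researchers generated gists with a trained neural network. The hard-coded `SCENE_HINTS` table, the `Gist` class and `make_gist` below are hypothetical and exist only to show the kind of information a gist carries.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical hand-written hints: background colour (RGB) and an object
# box (x, y, width, height) on a small grid. A real system learns these.
SCENE_HINTS = {
    "swimming": ((0, 90, 200), (4, 3, 2, 2)),          # blue water, small swimmer
    "riding a bike": ((120, 120, 120), (5, 2, 3, 4)),  # grey road, rider and bike
}
DEFAULT_HINT = ((0, 0, 0), (0, 0, 0, 0))  # black background, no object


@dataclass
class Gist:
    background: tuple[int, int, int]  # RGB colour filling the whole frame
    layout: list[list[int]]           # 1 where the object sits, 0 elsewhere


def make_gist(text: str, width: int = 8, height: int = 8) -> Gist:
    """Build a coarse gist: a background colour plus a rough object layout."""
    colour, (x, y, w, h) = SCENE_HINTS.get(text.lower(), DEFAULT_HINT)
    layout = [[0] * width for _ in range(height)]
    for row in range(y, min(y + h, height)):
        for col in range(x, min(x + w, width)):
            layout[row][col] = 1
    return Gist(colour, layout)


# Print the layout as a tiny ASCII picture ('#' marks the object).
gist = make_gist("swimming")
for row in gist.layout:
    print("".join("#" if cell else "." for cell in row))
```

A gist deliberately leaves out fine detail such as motion and texture. It only fixes the colours and where things go, which later frames can then fill in.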

The second step uses what is called a “discriminator”, which lets the AI identify the right set of gists to use when creating the video from the user’s text input. If the text input asks for “riding on a bike”, the gists made in the first step are used to generate a video showing a person riding a bike. The resulting images are good enough to pass as a reasonable video for now, but the scientists are actively working on improving the system’s core abilities.
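In GAN-based systems, a discriminator is a network trained to tell real samples from generated ones, and in a text-conditioned setup it also checks whether a sample fits the text. The toy sketch below swaps in a hand-written score for that trained network: it rewards candidates whose (hypothetical) `label` matches the prompt and adds a made-up `quality` value standing in for realism, then keeps the top-ranked gists. `Candidate`, `discriminator_score` and `select_gists` are illustrative names, not the researchers' implementation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Candidate:
    label: str      # activity the candidate gist depicts (hypothetical field)
    quality: float  # stand-in for how realistic it looks, 0.0 to 1.0


def discriminator_score(candidate: Candidate, prompt: str) -> float:
    """Toy score: +1 if the candidate matches the prompt, plus its quality."""
    matches_text = 1.0 if candidate.label in prompt.lower() else 0.0
    return matches_text + candidate.quality


def select_gists(candidates: list[Candidate], prompt: str, keep: int = 2) -> list[Candidate]:
    """Rank candidates by the toy score and keep the best `keep` of them."""
    ranked = sorted(candidates, key=lambda c: discriminator_score(c, prompt), reverse=True)
    return ranked[:keep]


candidates = [
    Candidate("swimming", 0.9),
    Candidate("riding a bike", 0.4),
    Candidate("riding a bike", 0.7),
    Candidate("jogging", 0.8),
]
chosen = select_gists(candidates, "A person riding a bike")
print(chosen)
```

Adding a text-match term to a quality term is just one simple way to combine the two checks. It ensures a sharp but off-topic gist (the swimming one here) loses to a blurrier gist that actually shows the requested activity. In a real GAN both judgements come from the same learned network.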